Google Data Analytics Professional Certificate - Part 2

This is part of a series of posts on the Google Data Analytics course from Coursera. It is not meant to be a review of the course, nor by any means an extensive overview of its content. It is intended to be short and to cover only the main concepts and learnings I gathered from each module. My purpose for these blog posts is mainly to consolidate what I learned from the course, and also to help anyone who might be interested in reading a little bit about these subjects or this course.

In this post you will find a mix of direct content from the course, my own personal notes and also some extrapolations and additions I made wherever I felt the need to add information.

Ask Questions to Make Data-Driven Decisions

Solve problems with data

Data analysts typically work with six problem types:

Making predictions: A company that wants to know the best advertising method to bring in new customers is an example of a problem requiring analysts to make predictions. Analysts with data on location, type of media, and number of new customers acquired as a result of past ads can't guarantee future results, but they can help predict the best placement of advertising to reach the target audience.

Categorizing things: An example of a problem requiring analysts to categorize things is a company's goal to improve customer satisfaction. Analysts might classify customer service calls based on certain keywords or scores. This could help identify top-performing customer service representatives or help correlate certain actions taken with higher customer satisfaction scores.

Spotting something unusual: A company that sells smart watches that help people monitor their health would be interested in designing their software to spot something unusual. Analysts who have analyzed aggregated health data can help product developers determine the right algorithms to spot and set off alarms when certain data doesn't trend normally.

Identifying themes: User experience (UX) designers might rely on analysts to analyze user interaction data. Similar to problems that require analysts to categorize things, usability improvement projects might require analysts to identify themes to help prioritize the right product features for improvement. Themes are most often used to help researchers explore certain aspects of data. In a user study, user beliefs, practices, and needs are examples of themes. The difference between categorizing things and identifying themes is that categorizing things involves assigning items to categories; identifying themes takes those categories a step further by grouping them into broader themes.

Discovering connections: A third-party logistics company working with another company to get shipments delivered to customers on time is a problem requiring analysts to discover connections. By analyzing the wait times at shipping hubs, analysts can determine the appropriate schedule changes to increase the number of on-time deliveries.

Finding patterns: Minimizing downtime caused by machine failure is an example of a problem requiring analysts to find patterns in data. For example, by analyzing maintenance data, they might discover that most failures happen if regular maintenance is delayed by more than a 15-day window.
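As a rough illustration of the "finding patterns" problem type, the sketch below compares failure rates for machines whose maintenance was delayed by more than 15 days against those serviced within the window. The records and the `failure_rate_by_delay` helper are made up for this example; only the 15-day window comes from the text above.

```python
from collections import defaultdict

# Hypothetical maintenance log: (days maintenance was delayed, machine failed afterwards?)
records = [
    (2, False), (5, False), (9, False), (12, True),
    (16, True), (18, True), (21, False), (30, True),
]

def failure_rate_by_delay(rows, window_days=15):
    """Return the share of failures for delays within vs. beyond the window."""
    counts = defaultdict(lambda: [0, 0])  # group -> [failures, total]
    for delay, failed in rows:
        group = "late" if delay > window_days else "on_time"
        counts[group][0] += int(failed)
        counts[group][1] += 1
    return {group: failures / total for group, (failures, total) in counts.items()}

rates = failure_rate_by_delay(records)
print(rates)
```

With real maintenance data, a clear gap between the two rates would be the kind of pattern an analyst might then investigate further.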

Craft effective questions

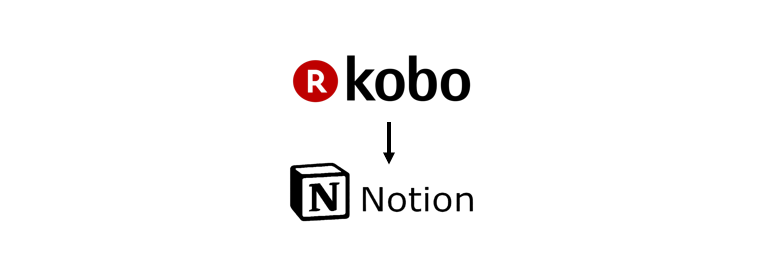

Highly effective questions can be structured as SMART questions:

Figure from the Google Data Analytics course on Coursera.

Here's an example that breaks down the thought process of turning a problem question into one or more SMART questions using the SMART method: What features do people look for when buying a new car?

Specific: Does the question focus on a particular car feature?

Measurable: Does the question include a feature rating system?

Action-oriented: Does the question influence creation of different or new feature packages?

Relevant: Does the question identify which features make or break a potential car purchase?

Time-bound: Does the question validate data on the most popular features from the last three years?

→ Questions should be open-ended. This is the best way to get responses that will help you accurately qualify or disqualify potential solutions to your specific problem. So, based on the thought process, possible SMART questions might be:

On a scale of 1-10 (with 10 being the most important) how important is your car having four-wheel drive?

What are the top five features you would like to see in a car package?

What features, if included with four-wheel drive, would make you more inclined to buy the car?

How much more would you pay for a car with four-wheel drive?

Has four-wheel drive become more or less popular in the last three years?

→ If you have a conversation with someone who works in retail, you might lead with questions like:

Specific: Do you currently use data to drive decisions in your business? If so, what kind(s) of data do you collect, and how do you use it?

Measurable: Do you know what percentage of sales is from your top-selling products?

Action-oriented: Are there business decisions or changes that you would make if you had the right information? For example, if you had information about how umbrella sales change with the weather, how would you use it?

Relevant: How often do you review data from your business?

Time-bound: Can you describe how data helped you make good decisions for your store(s) this past year?

→ If you are having a conversation with a teacher, you might ask different questions, such as:

Specific: What kind of data do you use to build your lessons?

Measurable: How well do student benchmark test scores correlate with their grades?

Action-oriented: Do you share your data with other teachers to improve lessons?

Relevant: Have you shared grading data with an entire class? If so, do students seem to be more or less motivated, or about the same?

Time-bound: In the last five years, how many times did you review data from previous academic years?

→ If you are having a conversation with a small business owner of an ice cream shop, you could ask:

Specific: What data do you use to help with purchasing and inventory?

Measurable: Can you order (rank) these factors from most to least influential on sales: price, flavor, and time of year (season)?

Action-oriented: Is there a single factor you need more data on so you can potentially increase sales?

Relevant: How do you advertise to or communicate with customers?

Time-bound: What does your year-over-year sales growth look like for the last three years?

Things to avoid when asking questions

1. Leading questions: questions that only have a particular response. Example: This product is too expensive, isn’t it?

This is a leading question because it suggests an answer as part of the question. A better question might be, “What is your opinion of this product?” There are tons of answers to that question, and they could include information about usability, features, accessories, color, reliability, and popularity, on top of price. Now, if your problem is actually focused on pricing, you could ask a question like “What price (or price range) would make you consider purchasing this product?” This question would provide a lot of different measurable responses.

2. Closed-ended questions: questions that ask for a one-word or brief response only. Example: Were you satisfied with the customer trial?

This is a closed-ended question because it doesn’t encourage people to expand on their answer. It is really easy for them to give one-word responses that aren’t very informative. A better question might be, “What did you learn about customer experience from the trial?” This encourages people to provide more detail besides “It went well.”

3. Vague questions: questions that aren’t specific or don’t provide context. Example: Does the tool work for you?

This question is too vague because there is no context. Is it about comparing the new tool to the one it replaces? You just don’t know. A better inquiry might be, “When it comes to data entry, is the new tool faster, slower, or about the same as the old tool? If faster, how much time is saved? If slower, how much time is lost?” These questions give context (data entry) and help frame responses that are measurable (time).

Reports vs Dashboards

Reports and dashboards are both tools used to present information and data, but they have some key differences in terms of their format and purpose.

A report is a document that presents information in a structured format, such as a word processing document or a PDF. Reports are typically used to present detailed information about a specific topic or problem, and are usually intended for a specific audience or purpose. Reports are often used to present the results of an analysis or investigation, and may include text, tables, charts, and other types of data visualizations. They can be long and detailed, and are usually used for historical analysis and to support the decision-making process.

A dashboard, on the other hand, is a real-time, interactive tool that provides a visual summary of key information or metrics. Dashboards are powerful visual tools that help you tell your data story. They are usually designed to be easy to read and understand, and may include charts, graphs, and other types of data visualizations. They are typically used to monitor performance and track progress over time, and are intended to be used by a wide variety of users, including managers, team leaders, and individual contributors. Dashboards often organize information from multiple datasets into one central location, offering huge time savings, and display data in a more summarized way than reports. The following table summarizes the benefits of using a dashboard for both data analysts and their stakeholders:

[Table image: benefits of dashboards for data analysts and stakeholders]

In summary, reports are intended to provide detailed information about a specific topic, and are often used for historical analysis and to support the decision-making process, while dashboards are designed to be interactive and real-time, providing a visual summary of key information and metrics. Dashboards are intended for monitoring performance, tracking progress, and enabling quick decisions.

→ Often, businesses will tailor a dashboard for a specific purpose. The three most common categories are:

- Strategic: focuses on long-term goals and strategies at the highest level of metrics. A wide range of businesses use strategic dashboards when evaluating and aligning their strategic goals. These dashboards provide information over the longest time frame, from a single financial quarter to years. They typically contain information that is useful for enterprise-wide decision-making. Below is an example of a strategic dashboard which focuses on key performance indicators (KPIs) over a year.

[Image: example strategic dashboard showing KPIs over a year]

Figure from the Google Data Analytics course on Coursera.

- Operational: short-term performance tracking and intermediate goals. Operational dashboards are, arguably, the most common type of dashboard. Because these dashboards contain information on a time scale of days, weeks, or months, they can provide performance insight almost in real-time. This allows businesses to track and maintain their immediate operational processes in light of their strategic goals. The operational dashboard below focuses on customer service. Resolutions are divided between first call resolution (61undefined).

[Image: example operational dashboard focused on customer service]

Figure from the Google Data Analytics course on Coursera.

- Analytical: consists of the datasets and the mathematics used in these sets. Analytic dashboards contain a vast amount of data used by data analysts. These dashboards contain the details involved in the usage, analysis, and predictions made by data scientists. Certainly the most technical category, analytic dashboards are usually created and maintained by data science teams and rarely shared with upper management as they can be very difficult to understand. The analytic dashboard below focuses on metrics for a company’s financial performance.

[Image: example analytic dashboard with metrics for a company’s financial performance]

Figure from the Google Data Analytics course on Coursera.

Creating a dashboard

1. Identify the stakeholders who need to see the data and how they will use it

To get started with this, you need to ask effective questions. Below is a guide for this phase, based on the ‘Dashboard Requirements Gathering Worksheet’ from Looker. This is a great resource to help guide you through this process again and again.

2. Design the dashboard (what should be displayed)

Use these tips to help make your dashboard design clear, easy to follow, and simple:

Use a clear header to label the information

Add short text descriptions to each visualization

Show the most important information at the top

3. Create mock-ups if desired

This is optional, but a lot of data analysts like to sketch out their dashboards before creating them.

4. Select the visualizations you will use on the dashboard

You have a lot of options here and it all depends on what data story you are telling. If you need to show a change of values over time, line charts or bar graphs might be the best choice. If your goal is to show how each part contributes to the whole amount being reported, a pie or donut chart is probably a better choice. To learn more about choosing the right visualizations, check out Tableau’s galleries.

5. Create filters as needed

Filters show certain data while hiding the rest of the data in a dashboard. This can be a big help to identify patterns while keeping the original data intact. It is common for data analysts to use and share the same dashboard, but manage their part of it with a filter.
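To make the idea of a filter more concrete, here is a minimal Python sketch of showing some rows while leaving the underlying data untouched. The sales rows and the `filter_rows` helper are hypothetical and only stand in for whatever a real dashboard tool does behind the scenes.

```python
# Hypothetical dashboard data: one dict per row.
sales = [
    {"region": "North", "month": "Jan", "revenue": 1200},
    {"region": "South", "month": "Jan", "revenue": 950},
    {"region": "North", "month": "Feb", "revenue": 1350},
    {"region": "South", "month": "Feb", "revenue": 1010},
]

def filter_rows(rows, **criteria):
    """Return a new list with only the rows matching every criterion.

    The input list is never modified, so anyone else sharing
    the same data still sees all of it.
    """
    return [row for row in rows if all(row.get(k) == v for k, v in criteria.items())]

north_view = filter_rows(sales, region="North")
print(len(north_view), "of", len(sales), "rows shown")
```

Because the filter returns a new view instead of deleting rows, two analysts can look at different regions of the same dataset without affecting each other.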

Dashboard Requirements Gathering Worksheet:

→ Questions to ask users:

How do you hope data will help you?

What questions are you trying to answer with the data? In other words, what problem are you trying to solve?

What are the three most important metrics that you care about?

How are these metrics defined or calculated?

Will you need to limit the data you see (for example, will you need to only look at results from a specific region or a specific time frame)? How so?

Are all the data sources you need to answer your questions currently available?

Are there any reports you use today that could be provided as examples of what would be useful? If so, please provide them.

If you had all this information in front of you, would you have enough information to take action? What action would you take? Would you need to know anything else?

→ Business requirements:

Who is the target audience for this dashboard?

Who is the primary business owner?

Who is the primary technical owner?

What is the pain point this dashboard aims to address?

What requirements have been expressed for this content?

What actions are the users trying to take based on this data?

→ Technical requirements:

Do you have examples of existing reports that should be replicated?

Do you have product specifications, or requirements for what metrics you need?

Will having this data in (platform used) replace an existing system?

Is the data needed for this dashboard readily available?

Which data sources correlate to this dashboard?

Where does this data live?

What is the delivery method for this data?

Who should have access to this data?

Who should not have access to this data?

Big vs Small Data

Big data and small data are terms used to describe the size and scale of data sets. The main difference between big data and small data is the volume, velocity, and variety of the data (some data analysts also consider veracity, which refers to the quality and reliability of the data).

Big data refers to extremely large and complex data sets that are difficult to process and analyze using traditional methods. These data sets are often characterized by high volume (a large amount of data), high velocity (data is generated and collected at a fast rate), and high variety (data comes from a wide range of sources and types). Examples of big data include social media data, sensor data, and log files from internet-connected devices. Processing and analyzing big data often requires the use of specialized tools and technologies, such as Hadoop and Spark, and distributed computing.

Small data, on the other hand, refers to data sets that are smaller and less complex. These data sets can typically be handled using traditional methods and tools, such as spreadsheet software and SQL databases. Examples of small data include data from a single department or business unit, data from a small research study, or data from a single individual.

It's worth noting that there is no definitive threshold that separates big data from small data, and the distinction can be somewhat subjective. Some organizations may consider a few terabytes of data to be big data, while others may consider the same amount small data. Ultimately, it depends on the context and the data processing capabilities of the organization. The following table explores the differences between big and small data:

[Table image: differences between big and small data]

→ Some Big data challenges:

A lot of organizations deal with data overload and way too much unimportant or irrelevant information.

Important data can be hidden deep down with all of the non-important data, which makes it harder to find and use. This can lead to slower and more inefficient decision-making time frames.

The data you need isn’t always easily accessible.

Current technology tools and solutions still struggle to provide measurable and reportable data. This can lead to unfair algorithmic bias.

There are gaps in many big data business solutions.

→ Some Big data benefits:

When large amounts of data can be stored and analyzed, it can help companies identify more efficient ways of doing business and save a lot of time and money.

Big data helps organizations spot the trends of customer buying patterns and satisfaction levels, which can help them create new products and solutions that will make customers happy.

By analyzing big data, businesses get a much better understanding of current market conditions, which can help them stay ahead of the competition.

Big data helps companies keep track of their online presence, especially feedback, both good and bad, from customers. This gives them the information they need to improve and protect their brand.

Working with spreadsheets

→ Some tips and best practices for working with spreadsheets:

Filter data to make your spreadsheet less complex and busy.

Use and freeze headers so you know what is in each column, even when scrolling.

When multiplying numbers, use an asterisk (*) not an X.

Start every formula and function with an equal sign (=).

Whenever you use an open parenthesis, make sure there is a closed parenthesis on the other end to match.

Change the font to something easy to read.

Set the border colors to white so that you are working in a blank sheet.

Create a tab with just the raw data, and a separate tab with just the data you need.
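Three of the tips above (start with an equal sign, match every parenthesis, use an asterisk for multiplication) are mechanical enough to check automatically. The following Python sketch does that for a formula typed as text. The `check_formula` helper is hypothetical and the multiplication check is only a rough heuristic, not a real formula parser.

```python
import re

def check_formula(formula):
    """Return a list of problems found in a spreadsheet formula string."""
    problems = []
    if not formula.startswith("="):
        problems.append("missing leading '='")
    # Track parenthesis depth; it must never go negative and must end at zero.
    depth = 0
    for char in formula:
        if char == "(":
            depth += 1
        elif char == ")":
            depth -= 1
            if depth < 0:
                break
    if depth != 0:
        problems.append("unbalanced parentheses")
    # Heuristic: a digit or closing parenthesis, then x/X, then another operand
    # suggests an X was typed where an asterisk was intended.
    if re.search(r"[0-9)]\s*[xX]\s*[0-9(A-Z]", formula):
        problems.append("use '*' for multiplication, not 'x'")
    return problems

print(check_formula("=SUM(A1:A5)*2"))
print(check_formula("A1 x 3"))
```

The first call should report no problems, while the second is missing the equal sign and uses an x for multiplication.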

Errors in spreadsheets

→ The following table is a reference you can use to look up common spreadsheet errors and examples of each. Knowing what the errors mean takes some of the fear out of getting them.

[Table image: common spreadsheet errors with examples]

→ TIP: set up conditional formatting in Microsoft Excel to highlight all cells in a spreadsheet that contain errors:

Click the gray triangle above row number 1 and to the left of Column A to select all cells in the spreadsheet.

From the main menu, click Home, and then click Conditional Formatting to select Highlight Cell Rules > More Rules.

For Select a Rule Type, choose Use a formula to determine which cells to format.

For Format values where this formula is true, enter =ISERROR(A1).

Click the Format button, select the Fill tab, select yellow (or any other color), and then click OK.

Click OK to close the format rule window.
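If the data has been exported from the spreadsheet, a similar check can be sketched outside Excel. The Python example below scans CSV text for cells containing one of Excel's error values. It assumes errors are exported as their display text (such as `#DIV/0!`), and both the `find_error_cells` helper and the sample data are hypothetical. It is an analogue of the highlighting rule above, not a description of how `ISERROR` works internally.

```python
import csv
import io

# Error values that Excel displays in a cell when a formula fails.
ERROR_VALUES = {"#DIV/0!", "#N/A", "#NAME?", "#NULL!", "#NUM!", "#REF!", "#VALUE!"}

def find_error_cells(csv_text):
    """Return (row_number, column_number, value) for each cell holding an error."""
    hits = []
    reader = csv.reader(io.StringIO(csv_text))
    for row_number, row in enumerate(reader, start=1):
        for column_number, value in enumerate(row, start=1):
            if value.strip() in ERROR_VALUES:
                hits.append((row_number, column_number, value.strip()))
    return hits

# Hypothetical export of a small sheet (row 1 is the header).
sample = (
    "item,price,quantity,total\n"
    "widget,2.50,4,10.00\n"
    "gadget,abc,3,#VALUE!\n"
    "bolt,1.00,0,#DIV/0!\n"
)
errors = find_error_cells(sample)
print(errors)
```

Row and column numbers are 1-based so they line up with what you would see in the spreadsheet itself.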

Scope of Work (SOW)

→ There’s no standard format for a SOW. They may differ significantly from one organization to another, or from project to project. However, they all have a few foundational pieces of content in common. At a minimum, any SOW should answer all the relevant questions in the areas presented next. Note that these areas may differ depending on the project. But at their core, the SOW document should always serve the same purpose by containing information that is specific, relevant, and accurate. If something changes in the project, your SOW should reflect those changes.

Deliverables: What work is being done, and what things are being created as a result of this project? When the project is complete, what are you expected to deliver to the stakeholders? Be specific here. Will you collect data for this project? How much, or for how long? Avoid vague statements. For example, “fixing traffic problems” doesn’t specify the scope. This could mean anything from filling in a few potholes to building a new overpass. Be specific! Use numbers and aim for hard, measurable goals and objectives. For example: “Identify top 10 issues with traffic patterns within the city limits, and identify the top 3 solutions that are most cost-effective for reducing traffic congestion.”

Milestones: This is closely related to your timeline. What are the major milestones for progress in your project? How do you know when a given part of the project is considered complete? Milestones can be identified by you, by stakeholders, or by other team members such as the Project Manager. Smaller examples might include incremental steps in a larger project like “Collect and process 50% of required data (100 survey responses)”, but may also be larger examples like “complete initial data analysis report” or “deliver completed dashboard visualizations and analysis reports to stakeholders”.

Timeline: Your timeline will be closely tied to the milestones you create for your project. The timeline is a way of mapping expectations for how long each step of the process should take. The timeline should be specific enough to help all involved decide if a project is on schedule. When will the deliverables be completed? How long do you expect the project will take to complete? If all goes as planned, how long do you expect each component of the project will take? When can we expect to reach each milestone?

Reports: Good SOWs also set boundaries for how and when you’ll give status updates to stakeholders. How will you communicate progress with stakeholders and sponsors, and how often? Will progress be reported weekly? Monthly? When milestones are completed? What information will status reports contain?

→ Basic questions to identify the context in the SOW:

Who created, collected, and funded the data collection?

What are the things that the data would have an impact on?

Where is the origin of the data?

When was the data created or collected?

What is the motivation behind the creation or collection?

What is the method used to create or collect it?

Communication is key

→ Before you communicate, think about:

Who your audience is;

What they already know;

What they need to know;

How you can communicate that effectively to them.

→ Some best practices for good data storytelling:

Compare the same types of data: Data can get mixed up when you chart it for visualization. Be sure to compare the same types of data and double check that any segments in your chart definitely display different metrics.

Visualize with care: A 0.01% drop in a score can look huge if you zoom in close enough. To make sure your audience sees the full story clearly, it is a good idea to start your Y-axis at 0.

Leave out needless graphs: If a table can show your story at a glance, stick with the table instead of a pie chart or a graph. Your busy audience will appreciate the clarity.

Test for statistical significance: Sometimes two datasets will look different, but you will need a way to test whether the difference is real and important. So remember to run statistical tests to see how much confidence you can place in that difference.

Pay attention to sample size: Gather lots of data. If a sample size is small, a few unusual responses can skew the results. If you find that you have too little data, be careful about using it to form judgments. Look for opportunities to collect more data, then chart those trends over longer periods.
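As one possible way to "test for statistical significance", the sketch below runs a simple permutation test using only Python's standard library. This is a technique I am choosing for illustration, not one prescribed by the course. The scores are hypothetical, and `permutation_test` is a helper written for this example.

```python
import random
from statistics import mean

def permutation_test(group_a, group_b, rounds=10_000, seed=0):
    """Estimate how often a difference in means at least as large as the
    observed one appears when group labels are shuffled at random."""
    rng = random.Random(seed)  # fixed seed so the result is repeatable
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    size_a = len(group_a)
    extreme = 0
    for _ in range(rounds):
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:size_a]) - mean(pooled[size_a:]))
        if diff >= observed:
            extreme += 1
    return extreme / rounds

# Hypothetical satisfaction scores from two versions of a survey.
version_a = [7, 8, 6, 9, 7, 8, 7, 9]
version_b = [6, 7, 6, 8, 5, 7, 6, 7]
p_value = permutation_test(version_a, version_b)
print(f"estimated p-value: {p_value:.3f}")
```

A small estimated p-value suggests the difference between the groups would rarely show up by chance alone. With samples this small, though, the sample-size warning above still applies.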

Properly conduct a meeting

→ Crafting a compelling agenda includes:

Meeting start and end time;

Meeting location (including information to participate remotely, if that option is available);

Objectives;

Background material or data the participants should review beforehand.

→ Before the meeting:

Identify your objective. Establish the purpose, goals, and desired outcomes of the meeting, including any questions or requests that need to be addressed;

Acknowledge participants and keep them involved with different points of view and experiences with the data, the project, or the business;

Organize the data to be presented. You might need to turn raw data into accessible formats or create data visualizations;

Prepare and distribute an agenda (see the agenda items listed above).

→ During the meeting:

Make introductions (if necessary) and review key messages;

Present the data;

Discuss observations, interpretations, and implications of the data;

Take notes during the meeting;

Determine and summarize next steps for the group.

→ After the meeting:

Distribute any notes or data;

Confirm next steps and timeline for additional actions;

Ask for feedback (this is an effective way to figure out if you missed anything in your recap).

That’s it for the second part of the Google Data Analytics course from Coursera. You can find the first part here, and I’ll soon be posting the following parts of the course. I also intend to write some more posts on other courses I took (SQL and Python so far), some detailed notes I took (and continue to take) on subjects like data visualisation, and probably some short summaries of my favourite books, with the best quotes and key concepts.

As I mentioned in the beginning, this is mainly with the goal of consolidating all topics I’m interested in learning and also having all of it well structured and put together in one place (this website). So if you find this kind of content useful and wish to read some more, you can follow me on Medium just so you know whenever I post more stuff.